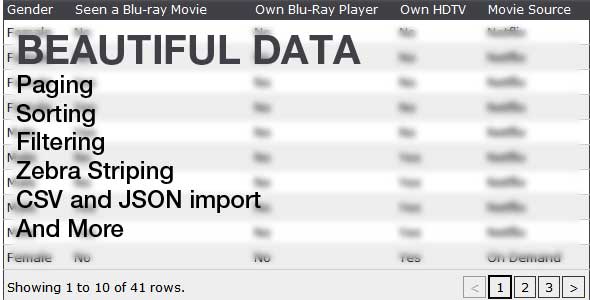

Beautiful Data: A jQuery Plugin for Turning Tables into Interactive Data Views

I recently had the pleasure of working with Beautiful Data, a jQuery plugin that takes a standard HTML table to the next level by adding features like paging, sorting, and searching. With just one line of code, you can integrate Beautiful Data into your HTML page and start experiencing the power of interactive data views.

Review

Beautiful Data is a game-changer for anyone working with tables. Its ability to easily integrate into any HTML page makes it a breeze to add features like paging, sorting, and searching. I was impressed by the plugin’s ease of use, as it can access data from CSV and JSON files with just a simple specification of the source file.

The features of Beautiful Data are extensive and include:

- Easily integrate into any HTML page with as little as ONE line of code

- Search and filter tables live, without waiting for any downloading

- Split data into multiple pages

- Very few DOM updates—just one per page change!

- Sort columns simply by clicking on the column header

- Optional case-sensitive search

- Enable and disable zebra striping to rows

- Cross-browser compatibility

Throughout my testing, I experienced no major issues with the plugin. The updates history shows that the developer has been actively working on improving the plugin, with regular bug fixes and new features being added.

Conclusion

Overall, I’m extremely satisfied with Beautiful Data. Its ease of use, robust features, and cross-browser compatibility make it an excellent choice for anyone looking to add interactivity to their tables. With a score of 4.39 out of 5, I highly recommend Beautiful Data to anyone looking to take their data visualization to the next level.

Update History

The plugin has had several updates since its initial release in October 2010. Some of the notable updates include:

- 1.0.3 (11/26/2010): Now allows for links and other HTML in tables

- 1.0.2 (10/30/2010): Minor bug fix to loading data asynchronously

- 1.0.1 (10/25/2010): Minor bug fix to broken pager in Firefox

- 1.0.0 (10/23/2010): First release

Introduction

Beautiful Soup is a Python library for parsing HTML and XML documents and extracting data from them. It is particularly useful for web scraping because it copes well with messy, real-world markup. It does not run JavaScript, so content that a page generates in the browser must first be rendered by another tool before Beautiful Soup can see it.
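One caveat is worth seeing concretely: Beautiful Soup parses markup as text and does not execute scripts. The following minimal sketch uses a made-up snippet (the `app` id and the script body are illustrative assumptions, not part of the tutorial's sample file) to show that an element filled in by JavaScript stays empty in the parse tree.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: in a browser, the script would fill the div with text.
html = (
    '<div id="app"></div>'
    '<script>document.getElementById("app").textContent = "Loaded";</script>'
)
soup = BeautifulSoup(html, "html.parser")

# The div is still empty because the script was never run.
app_text = soup.find(id="app").get_text()
print(repr(app_text))  # ''

# The script itself is available only as plain source text.
script_source = soup.find("script").string
print(script_source)
```

For pages like this, the rendered HTML has to come from a browser-automation tool or from the underlying data endpoint before it is handed to Beautiful Soup.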

In this tutorial, we will explore how to use Beautiful Soup to extract and manipulate data from HTML and XML documents. We will start by installing the library and creating a sample HTML document. Then, we will dive into the basics of using Beautiful Soup, including navigating the DOM, finding and selecting elements, and manipulating the HTML.

Installing Beautiful Soup

Before we start, you'll need to install Beautiful Soup. You can install it using pip, the Python package manager:

pip install beautifulsoup4

Getting Started

First, let's create a sample HTML document to work with. You can copy and paste the following code into a new file:

<html>

<head>

<title>Beautiful Soup Tutorial</title>

</head>

<body>

<h1>Welcome to the Beautiful Soup Tutorial!</h1>

<p>This is a sample paragraph of text.</p>

<ul>

<li>This is the first item in the list.</li>

<li>This is the second item in the list.</li>

<li>This is the third item in the list.</li>

</ul>

</body>

</html>

Next, let's create a new Python file to work with:

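from bs4 import BeautifulSoup

# Open the local HTML file (requests.get() only accepts http:// or https:// URLs, not file paths)

with open('path_to_your_html_file.html', encoding='utf-8') as html_file:

    # Parse the HTML content

    soup = BeautifulSoup(html_file, 'html.parser')

Replace 'path_to_your_html_file.html' with the actual path to your HTML file. To parse a page from the web instead, fetch it with requests.get(url) and pass response.content to BeautifulSoup.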
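As a self-contained sketch of the loading step, the example below writes the sample document to a temporary file and parses it from disk. The temporary directory and the `sample.html` file name are assumptions made so the example runs anywhere; in practice you would open your own file.

```python
import tempfile
from pathlib import Path

from bs4 import BeautifulSoup

SAMPLE_HTML = """<html>
<head>
<title>Beautiful Soup Tutorial</title>
</head>
<body>
<h1>Welcome to the Beautiful Soup Tutorial!</h1>
<p>This is a sample paragraph of text.</p>
<ul>
<li>This is the first item in the list.</li>
<li>This is the second item in the list.</li>
<li>This is the third item in the list.</li>
</ul>
</body>
</html>"""

with tempfile.TemporaryDirectory() as tmp_dir:
    # Hypothetical file name, used only for this demonstration.
    html_path = Path(tmp_dir) / "sample.html"
    html_path.write_text(SAMPLE_HTML, encoding="utf-8")

    # BeautifulSoup accepts an open file handle as well as a string.
    with html_path.open(encoding="utf-8") as html_file:
        soup = BeautifulSoup(html_file, "html.parser")

print(soup.title.string)  # Beautiful Soup Tutorial
```

The document is read completely while the file is open, so the `soup` object remains usable after the file is closed.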

Navigating the DOM

Beautiful Soup provides a parse tree, which represents the HTML document as a hierarchy of Python objects. These objects have attributes and methods that let you access and modify the content and attributes of the elements.

For example, to access the title of the HTML document, you can use the following code:

title = soup.title

print(title)

This will output <title>Beautiful Soup Tutorial</title>, the complete title element. To get only the text, Beautiful Soup Tutorial, use title.string.
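To make the parse tree more tangible, here is a small sketch that walks a compact version of the sample document. The markup is inlined as a string, without whitespace between tags, so that the example is self-contained and the list of children contains only elements.

```python
from bs4 import BeautifulSoup

html = (
    "<html><head><title>Beautiful Soup Tutorial</title></head>"
    "<body><h1>Welcome to the Beautiful Soup Tutorial!</h1>"
    "<p>This is a sample paragraph of text.</p></body></html>"
)
soup = BeautifulSoup(html, "html.parser")

title_tag = soup.title
print(title_tag)              # <title>Beautiful Soup Tutorial</title>
print(title_tag.string)       # Beautiful Soup Tutorial
print(title_tag.name)         # title
print(title_tag.parent.name)  # head

# Attribute-style access returns the first matching descendant.
heading_text = soup.h1.get_text()
print(heading_text)           # Welcome to the Beautiful Soup Tutorial!

# .children iterates over the direct children of an element.
body_children = [child.name for child in soup.body.children]
print(body_children)          # ['h1', 'p']
```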

Finding and Selecting Elements

You can use various methods to find and select elements in the parse tree. Some common methods include:

- find: Find the first element that matches the given parameters.

    title = soup.find('h1')
    print(title.text)

- find_all: Find all elements that match the given parameters.

    paragraphs = soup.find_all('p')
    for paragraph in paragraphs:
        print(paragraph.text)

- select: Select elements that match the given CSS selectors.

    items = soup.select('ul li')
    for item in items:
        print(item.text)

- select_one: Select the first element that matches the given CSS selectors.

    first_item = soup.select_one('ul li:first-child')
    print(first_item.text)
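The four lookup methods above can be compared side by side in one runnable sketch. It inlines the body of the sample document so it does not depend on a file, and the variable names are illustrative only.

```python
from bs4 import BeautifulSoup

html = """<body>
<h1>Welcome to the Beautiful Soup Tutorial!</h1>
<p>This is a sample paragraph of text.</p>
<ul>
<li>This is the first item in the list.</li>
<li>This is the second item in the list.</li>
<li>This is the third item in the list.</li>
</ul>
</body>"""
soup = BeautifulSoup(html, "html.parser")

# find returns the first match, or None when nothing matches.
first_heading = soup.find("h1")
missing_image = soup.find("img")
print(first_heading.get_text())  # Welcome to the Beautiful Soup Tutorial!
print(missing_image)             # None

# find_all returns a list of every match.
paragraph_texts = [p.get_text() for p in soup.find_all("p")]
print(paragraph_texts)           # ['This is a sample paragraph of text.']

# select and select_one take CSS selectors.
item_texts = [li.get_text() for li in soup.select("ul li")]
first_item = soup.select_one("ul li:first-child")
print(len(item_texts))           # 3
print(first_item.get_text())     # This is the first item in the list.
```

Because find and select_one return None when nothing matches, it is worth checking the result before reading attributes from it.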

Manipulating the HTML

Beautiful Soup allows you to modify the HTML by changing the attributes, contents, or structure of the elements. Here are a few examples:

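- Add a new element: Use the append method to add a new element to the end of an existing element. Set the link text through the string attribute; passing text='New link' to new_tag would create an HTML attribute named text instead.

    paragraph = soup.find('p')
    new_link = soup.new_tag('a', href='http://example.com')
    new_link.string = 'New link'
    paragraph.append(new_link)

- Add attributes to an element: Use the attrs attribute to add new attributes to an element. The sample document contains no img element, so find returns None for it; check the result before assigning.

    image = soup.find('img')
    if image is not None:
        image.attrs['src'] = 'path_to_your_image.jpg'

- Change the text content of an element: Use the string attribute to change the text content of an element.

    header = soup.find('h1')
    header.string = 'New title'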
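The three kinds of modification can be tried together in the following sketch. It works on a shortened copy of the sample document, and the `intro` class name is an assumption chosen only to have an attribute to set on an element that exists.

```python
from bs4 import BeautifulSoup

html = (
    "<body><h1>Welcome to the Beautiful Soup Tutorial!</h1>"
    "<p>This is a sample paragraph of text.</p></body>"
)
soup = BeautifulSoup(html, "html.parser")

# Add a new element: create the tag, set its text, then append it.
paragraph = soup.find("p")
new_link = soup.new_tag("a", href="http://example.com")
new_link.string = "New link"
paragraph.append(new_link)

# Add an attribute: tags support dictionary-style assignment.
paragraph["class"] = "intro"  # hypothetical class name

# Change the text content of an element.
header = soup.find("h1")
header.string = "New title"

print(header)    # <h1>New title</h1>
print(new_link)  # <a href="http://example.com">New link</a>
```

The changes live in the in-memory tree; write `str(soup)` back to a file if you want to keep the modified markup.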

Conclusion

That's it for this tutorial! You now know the basics of using Beautiful Soup to parse, navigate, and manipulate HTML and XML documents. You can use this knowledge to scrape and process data from the web, or to build more advanced web applications.

Beautiful Soup Configuration

Beautiful Soup is configured through arguments to the BeautifulSoup constructor and to its output methods; there is no separate configuration object.

- Parser: the second constructor argument selects the parser: 'html.parser' (built into Python), 'lxml', or 'html5lib'. The last two are separate packages that must be installed before use.

- Pretty-printed output: soup.prettify() returns the document as an indented string, while str(soup) returns it without added indentation.

- Comments: HTML comments are kept in the parse tree as Comment objects rather than being treated as ordinary text.
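In practice, Beautiful Soup takes its options as constructor arguments and method calls. The sketch below, a minimal illustration using only the built-in 'html.parser', shows the parser choice, the two output forms, and how comments appear in the tree. The markup is made up for the example.

```python
from bs4 import BeautifulSoup, Comment

html = "<ul><li>One</li><!-- a comment --><li>Two</li></ul>"

# The second argument names the parser; 'lxml' and 'html5lib' are
# alternatives, but each requires its own package to be installed.
soup = BeautifulSoup(html, "html.parser")

# str() gives compact output; prettify() gives indented, multi-line output.
compact = str(soup)
pretty = soup.prettify()
print(compact)
print(pretty)

# Comments are stored as Comment objects, a special kind of string.
comments = soup.find_all(string=lambda text: isinstance(text, Comment))
print(comments)  # [' a comment ']
```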
